Intel, Microsoft Push Copilot AI to 40 TOPS on PCs

The landscape of personal computing is set to undergo a significant transformation as Microsoft’s Copilot AI service prepares to run locally on PCs. This shift, confirmed by Intel executives at the company’s AI Summit in Taipei, marks a new era in AI computing, where the processing power of Neural Processing Units (NPUs) will play a crucial role in enabling advanced AI capabilities on consumer devices.

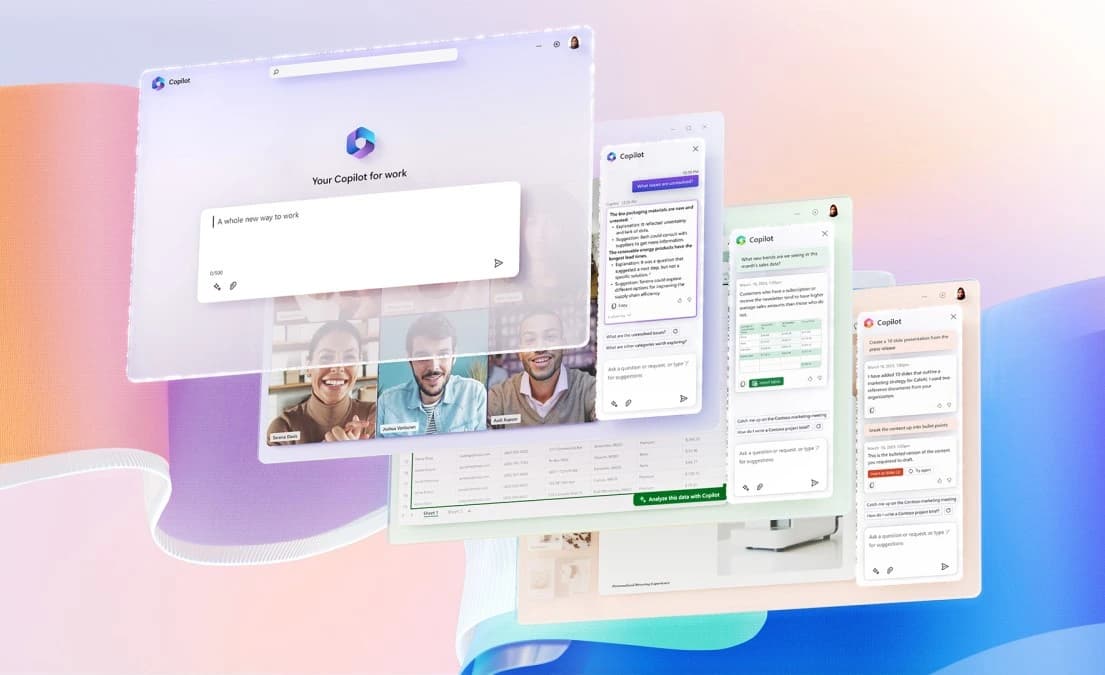

Microsoft’s vision for the future of AI computing involves a close collaboration with hardware manufacturers, such as Intel, to define the requirements for AI PCs. The new definition of an AI PC, co-developed by Microsoft and Intel, includes the presence of an NPU, CPU, GPU, Microsoft’s Copilot, and a dedicated Copilot key on the keyboard. This comprehensive approach ensures that AI PCs are equipped with the necessary hardware and software components to deliver a seamless and efficient AI experience to users.

One of the most significant revelations from Intel’s AI Summit is the confirmation of a 40 TOPS (trillion operations per second) requirement for NPUs on next-generation AI PCs. This threshold is a substantial leap over current consumer processors: Apple’s M3 lineup offers up to 18 TOPS, AMD’s Ryzen 8040 and 7040 laptop chips provide 16 and 10 TOPS respectively, and Intel’s Meteor Lake laptop chips reach 10 TOPS.
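To make the size of that gap concrete, here is a minimal sketch that compares the quoted figures against the 40 TOPS threshold. The chip names and numbers come from the paragraph above; the helper name `gap_to_requirement` is purely illustrative, and vendor TOPS figures are not always measured the same way, so treat the output as rough arithmetic rather than a benchmark.

```python
# NPU throughput figures (in TOPS) as quoted in the article.
# Apple's figure is an "up to" value for the M3 lineup.
NPU_TOPS = {
    "Apple M3": 18,
    "AMD Ryzen 8040": 16,
    "AMD Ryzen 7040": 10,
    "Intel Meteor Lake": 10,
}

# The NPU requirement for next-generation AI PCs, per the article.
REQUIRED_TOPS = 40


def gap_to_requirement(tops, required=REQUIRED_TOPS):
    """Return (shortfall in TOPS, speedup factor needed to hit the requirement)."""
    return required - tops, required / tops


if __name__ == "__main__":
    for chip, tops in NPU_TOPS.items():
        shortfall, factor = gap_to_requirement(tops)
        print(f"{chip}: {tops} TOPS, {shortfall} TOPS short, needs {factor:.1f}x")
```

Under these numbers, even the fastest chip listed would need more than double its current NPU throughput, and the 10 TOPS parts would need a fourfold increase.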

The move to run Copilot locally on PCs is expected to bring several benefits, including reduced latency, improved performance, and enhanced privacy. By executing AI workloads on the device itself, rather than relying solely on cloud-based processing, users can expect faster response times. Additionally, local processing alleviates concerns about sensitive data being transmitted to remote servers, providing an added layer of privacy and security.

To meet the demands of next-generation AI PCs, chipmakers are racing to develop processors with higher NPU performance. Qualcomm’s upcoming Snapdragon X Elite, set to arrive later this year, boasts 45 TOPS of NPU performance, surpassing the 40 TOPS requirement set by Microsoft. Intel, for its part, has announced its Lunar Lake processors, slated for release in 2024, which it says will offer triple the NPU performance of its current chips.

As the AI PC market evolves, Intel is working to expand the number of AI features available on its silicon. The company plans to support 300 new AI-enabled features on its Meteor Lake processors this year, with a focus on optimizing these features specifically for Intel’s OpenVINO platform. This strategy aims to provide Intel with a competitive edge by offering a range of Intel-specific AI features that leverage the capabilities of its NPUs.

The race for local AI processing is not limited to hardware advancements alone. Software optimization and developer support play an equally important role in enabling AI PCs to reach their full potential. Intel’s AI PC Accelerator Program aims to foster collaboration with the developer community, recognizing the significant market opportunity for new AI software as the company targets selling 100 million AI PCs by 2025.

While Microsoft’s Copilot will be compatible with NPUs from various vendors through the DirectML framework, the performance of AI workloads will ultimately depend on the TOPS capabilities of the underlying hardware. As a result, we can expect a “TOPS war” to unfold in the coming years, with chipmakers vying for supremacy in both silicon performance and marketing prowess.

The future of AI computing is rapidly taking shape, with Microsoft’s Copilot leading the charge in bringing AI capabilities to the masses. As hardware and software advancements continue to push the boundaries of what is possible with local AI processing, consumers can look forward to a new era of intelligent, responsive, and privacy-focused computing experiences. The race is on, and the winners will be those who can deliver the most powerful, efficient, and user-friendly AI solutions in the years to come.
